Python environment

Configure the pip index mirror

Edit the file C:\Users\${user}\pip\pip.ini:

[global]

index-url = http://mirrors.aliyun.com/pypi/simple/

[install]

trusted-host=mirrors.aliyun.com
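A quick way to confirm the file is well formed is to parse it with Python's standard configparser. The sketch below is only a sanity check under the assumption that the file holds exactly the two settings shown above; pip reads the real file on its own, and `read_pip_settings` is a hypothetical helper name, not part of pip.

```python
import configparser

# Same content as the pip.ini shown above.
PIP_INI = """
[global]
index-url = http://mirrors.aliyun.com/pypi/simple/

[install]
trusted-host=mirrors.aliyun.com
"""


def read_pip_settings(text):
    """Return (index_url, trusted_host) parsed from pip.ini-style text."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    # fallback=None avoids an exception when a section or key is missing.
    index_url = parser.get("global", "index-url", fallback=None)
    trusted_host = parser.get("install", "trusted-host", fallback=None)
    return index_url, trusted_host


index_url, trusted_host = read_pip_settings(PIP_INI)
print(index_url)
print(trusted_host)
```

If either value prints as None, the section name or key is probably misspelled in the file.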

Install virtualenv

Preferably add the Douban or Aliyun mirror to each command: -i http://pypi.douban.com/simple/ --trusted-host pypi.douban.com

pip install virtualenv

pip install virtualenvwrapper

pip install virtualenvwrapper-win

Create a clean Python environment

# Target location: C:\Users\Burna\Envs\python27

mkvirtualenv --python=C:\Software\Python\Python27\python.exe python27

# Target location: C:\Users\Burna\Envs\python39

mkvirtualenv --python=C:\Software\Python\Python39\python.exe python39

# Switch the current environment

workon python27

# Delete a Python environment

rmvirtualenv py3.6
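After running workon, it can be useful to confirm from inside Python which interpreter is actually in use. The following is a minimal sketch, assuming the environment was created by virtualenv or venv; `in_virtual_environment` is a hypothetical helper name introduced here for illustration.

```python
import sys


def in_virtual_environment():
    """Return True when the running interpreter belongs to a virtual environment."""
    # Older virtualenv releases set sys.real_prefix; venv and newer virtualenv
    # releases make sys.prefix differ from sys.base_prefix instead.
    if hasattr(sys, "real_prefix"):
        return True
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


print("interpreter:", sys.executable)
print("environment:", sys.prefix)
print("virtual environment active:", in_virtual_environment())
```

With an environment such as python39 active, the environment path printed here should point into the Envs directory rather than the system-wide installation.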

Install Scrapy

workon python39

pip install scrapy

# List the installed packages

pip list
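The same check can be done from Python, which is handy inside a script. This is a small sketch using the standard importlib.metadata module (Python 3.8 or later, so it applies to the python39 environment but not python27); `installed_version` is a hypothetical helper name.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


for name in ("scrapy", "virtualenv"):
    print(name, installed_version(name) or "not installed")
```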

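Getting started with Scrapy

Create a project

Working directory: C:\Burna\Workspace\Study

# Create the project; the project name cannot start with a digit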

scrapy startproject ArticleSpider

# Rename the directory ArticleSpider => 20220418_ArticleSpider

cd 20220418_ArticleSpider

# Create a new spider

scrapy genspider cnblogs news.cnblogs.com

# Run the spider

scrapy crawl cnblogs

# Open the Scrapy shell on a page (for quickly debugging spider code)

scrapy shell https://news.cnblogs.com/n/719025/
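The naming restriction explains why the project is created as ArticleSpider and only afterwards renamed to a dated directory. The sketch below approximates the rule as "a letter or underscore first, then letters, digits, or underscores"; the pattern is an assumption for illustration, not code taken from Scrapy, and `is_valid_project_name` is a hypothetical helper.

```python
import re

# Assumed pattern: a letter or underscore first, then letters, digits, underscores.
_PROJECT_NAME = re.compile(r"^[_a-zA-Z]\w*$")


def is_valid_project_name(name):
    """Return True if the name would be acceptable under the assumed rule."""
    return _PROJECT_NAME.match(name) is not None


print(is_valid_project_name("ArticleSpider"))           # True
print(is_valid_project_name("20220418_ArticleSpider"))  # False
```

Renaming only the outer directory is safe because the Python package inside it keeps the valid name ArticleSpider.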

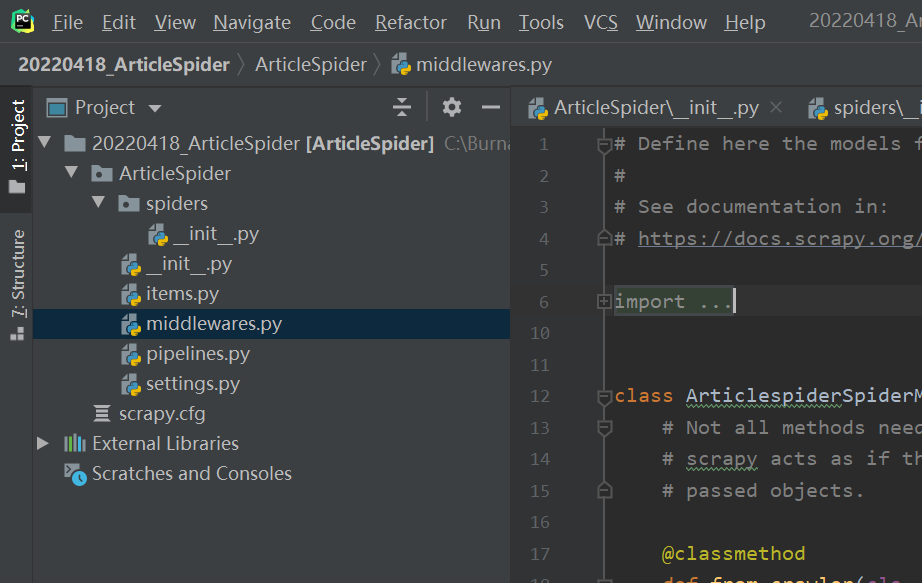

Project directory

Generated code

import scrapy


class CnblogsSpider(scrapy.Spider):
    name = 'cnblogs'
    allowed_domains = ['news.cnblogs.com']
    start_urls = ['http://news.cnblogs.com/']

    def parse(self, response):
        pass
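The allowed_domains list limits which links the spider will follow. As a rough illustration of that idea, the standard-library sketch below treats a URL as allowed when its host equals a listed domain or is a subdomain of one. It is an approximation written for these notes, not Scrapy's own implementation, and `is_allowed` is a hypothetical helper.

```python
from urllib.parse import urlparse


def is_allowed(url, allowed_domains):
    """Return True if the URL's host is a listed domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in allowed_domains)


allowed = ["news.cnblogs.com"]
print(is_allowed("https://news.cnblogs.com/n/719025/", allowed))  # True
print(is_allowed("https://www.cnblogs.com/", allowed))            # False
```

Under this reading, links from the news pages to www.cnblogs.com would be skipped unless cnblogs.com itself is added to the list.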

Write a launcher script, main.py, in the project root so the spider can be run and debugged from an IDE:

import os

import sys

from scrapy.cmdline import execute

workdir = os.path.dirname(os.path.abspath(__file__))

print("Working directory => " + workdir)

sys.path.append(workdir)

execute("scrapy crawl cnblogs".split(" "))